Colour difference coding in computing (unfinished)

Billy Biggs <vektor@dumbterm.net>

This paper attempts to explain the different standards that exist for digital images stored as colour difference values: Y'CBCR or YUV formats. We introduce the standards involved, give a technical background to these colourspaces, and then look at how the standards specify encoded images. We then present case studies and describe how we believe conversions should be performed.

1 Standards

Video is standardized by locality: each region of the world uses either a variant of NTSC, or a variant of PAL. Broadcasters standardize themselves even more specifically by the properties of the equipment they use and support. In computing, we are faced with the challenge of writing software that can handle digital recordings and captured images from all possible TV and digital video standards, and then modify our output to be displayed on any of these plus the many variants of PC CRT and LCD screens. To deal with this effectively, we must be aware of the many standards in place and how to recognize them.

Below is a list of important standards for understanding digital images. I describe them briefly here so I can refer to them later. You can order copies of SMPTE standards from the SMPTE website, or maybe find them at your local library. ITU standards can also be ordered from their website, but my university library had copies of at least the original CCIR specifications.

2.1 ITU-R BT.470

The official title is "Conventional television systems", and it documents the 'standard' for composite video systems in use around the world: variants of NTSC, PAL and SECAM. This standard makes some interesting claims about video equipment calibration:

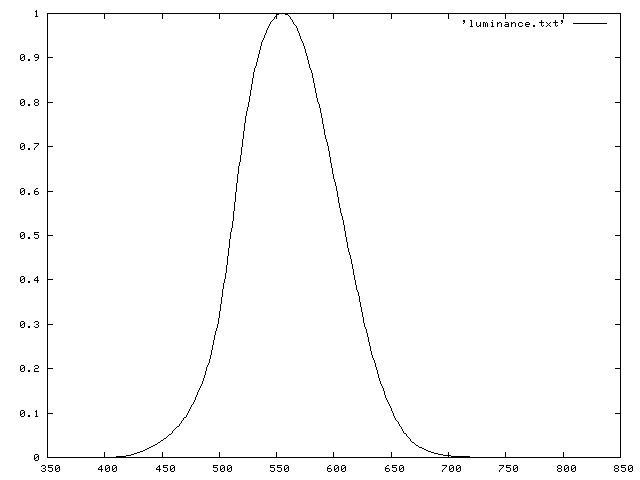

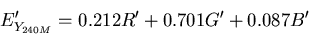

2.2 SMPTE 170M

"This standard reflects modern practice in the generation of NTSC signals and, in some respects, differs from the original NTSC specification published in 1953." I have the 1999 revision of the 1994 standard.

2.3 ITU-R BT.601 (aka CCIR 601)

This standardizes digital sampling for television: it specifies coefficients and excursions for Y'CBCR, but no chromaticities.

2.4 SMPTE 240M

This standard is for 1125-line HDTV systems. I have the 1999 revision of the 1995 standard.

2.5 ITU-R BT.709

2.6 JFIF JPEG images

2.7 MPEG1

2 Background

This section explains the aspects of digital image coding which lead to YUV encodings.

2.1 Colour perception and image coding

Human perception allows for about three degrees of freedom when representing colour. However, the eye is most sensitive to the region roughly in the centre of the visible spectrum. This sensitivity curve is called the luminous efficiency curve; luminance is light power weighted by that curve, and measuring the luminance of an image indicates how bright it will appear.

Between 60 and 70 percent of the luminance signal comprises green information. In image coding, we take advantage of this efficiency function by ensuring that this data is sampled at a high rate. The simplest way to do this is to "remove" the luminance information from the blue and red signals to form a pair of colour difference components (Poynton).

2.2 Gamma and image coding

The output of a CRT is not a linear function of the applied signal. Instead, a CRT has a power-law response to voltage: the luminance produced at the face of the display is approximately proportional to the applied voltage raised to a power known as gamma. In order to predict the luminance of the output, we must consider this gamma function when storing our colours.

For image coding we can use gamma to our advantage. If images are stored as gamma-corrected values, instead of luminance values, then we don't need to do a lossy conversion when we output. For this reason, digital images in RGB format are almost always stored as gamma-corrected R'G'B' values.

2.3 Chromaticities and RGB spaces

Monitors and other display devices usually use three primaries to create colours: red, green and blue. Over the years there have been different standards for exactly which colours of red, green and blue should be used, and these have changed to adapt to changes in phosphor technology and availability.

3 Colour difference coding

3.1 Encoding pipeline

For compression, the final encoding pipeline is:
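As a rough illustration of such a pipeline, the Python sketch below walks one pixel from linear RGB to 8-bit Y'CBCR. It is only a sketch under stated assumptions: the pure power-law gamma of 2.2, the BT.601 luma coefficients, the 16-235/16-240 quantization, and the names `gamma_correct` and `encode_pixel` are all chosen for illustration and are not fixed by the text above.

```python
# Hedged sketch of a colour difference encoding pipeline for one pixel.
# Assumptions (not taken from the paper): pure power-law gamma of 2.2,
# BT.601 luma coefficients, and 8-bit studio-range quantization.

GAMMA = 2.2                        # assumed display gamma
KR, KG, KB = 0.299, 0.587, 0.114   # BT.601 luma coefficients


def gamma_correct(linear):
    """Map a linear-light value in [0, 1] to a gamma-corrected value."""
    return linear ** (1.0 / GAMMA)


def encode_pixel(r, g, b):
    """Convert linear RGB in [0, 1] to an 8-bit (Y', CB, CR) triple."""
    # 1. Gamma-correct each component to obtain R'G'B'.
    rp, gp, bp = (gamma_correct(c) for c in (r, g, b))
    # 2. Form luma as a weighted sum of the gamma-corrected components.
    y = KR * rp + KG * gp + KB * bp
    # 3. Remove luma from blue and red, scaled so each spans [-0.5, 0.5].
    cb = (bp - y) / (2.0 * (1.0 - KB))
    cr = (rp - y) / (2.0 * (1.0 - KR))
    # 4. Quantize to 8 bits, leaving footroom and headroom.
    return (round(16 + 219 * y),
            round(128 + 224 * cb),
            round(128 + 224 * cr))


if __name__ == "__main__":
    for name, rgb in [("black", (0, 0, 0)), ("white", (1, 1, 1)),
                      ("blue", (0, 0, 1))]:
        print(name, encode_pixel(*rgb))
```

Each numbered comment marks a stage where two encoders can disagree: the choice of primaries feeding step 1, the gamma function, the coefficients in steps 2 and 3, and the quantization levels in step 4.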

4 Differences in encoding

Based on the above encoding pipeline, we can see where there are possibilities for differences in our output:

4.1 Differences in RGB chromaticities

4.2 Differences in gamma function

4.3 Differences in conversion coefficients

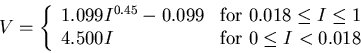

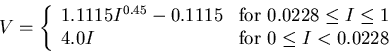

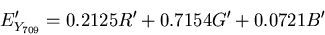

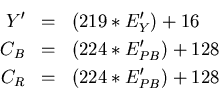

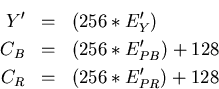
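To make the effect of mismatched coefficients concrete, here is a small Python sketch that encodes a pixel with one set of luma coefficients and decodes it with another. The coefficient values are the commonly published ones for each standard and are included here as an assumption, not read from the figures above; the function names `rgb_to_ycbcr` and `ycbcr_to_rgb` are hypothetical, and the values are kept in the analogue 0-1 and -0.5 to +0.5 ranges with no quantization.

```python
# Hedged sketch: what happens when encoder and decoder disagree on the
# luma coefficients. Values are analogue (no 8-bit quantization).

# Commonly published (KR, KG, KB) triples; treated as assumptions here.
LUMA_COEFFS = {
    "BT.601": (0.299, 0.587, 0.114),      # also used by SMPTE 170M
    "SMPTE 240M": (0.212, 0.701, 0.087),
    "BT.709": (0.2126, 0.7152, 0.0722),
}


def rgb_to_ycbcr(rp, gp, bp, standard):
    """Gamma-corrected R'G'B' in [0, 1] -> analogue (Y', CB, CR)."""
    kr, kg, kb = LUMA_COEFFS[standard]
    y = kr * rp + kg * gp + kb * bp
    cb = (bp - y) / (2.0 * (1.0 - kb))   # scaled to [-0.5, 0.5]
    cr = (rp - y) / (2.0 * (1.0 - kr))   # scaled to [-0.5, 0.5]
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr, standard):
    """Inverse of rgb_to_ycbcr for the same coefficient set."""
    kr, kg, kb = LUMA_COEFFS[standard]
    rp = y + 2.0 * (1.0 - kr) * cr
    bp = y + 2.0 * (1.0 - kb) * cb
    gp = (y - kr * rp - kb * bp) / kg    # solve the luma sum for green
    return rp, gp, bp


if __name__ == "__main__":
    green = (0.0, 1.0, 0.0)
    encoded = rgb_to_ycbcr(*green, "BT.601")
    print("decoded as BT.601:", ycbcr_to_rgb(*encoded, "BT.601"))
    print("decoded as BT.709:", ycbcr_to_rgb(*encoded, "BT.709"))
```

Decoding with the matching coefficients returns the original pixel, while decoding BT.601 data as BT.709 brings pure green back at roughly 0.85 with slightly negative red and blue. That is the kind of visible colour shift a converter must avoid by knowing which standard produced the data.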

4.4 Differences in quantization levels

In all of the ITU and SMPTE specifications, and in the MPEG2 spec, the analogue 0-1 values are converted to 8-bit components using the following formulae:

Y' = 16 + 219 × E'Y
CB = 128 + 224 × E'CB
CR = 128 + 224 × E'CR

This gives excursions of 16-235 for Y', and 16-240 for both CB and CR. However, the JFIF standard for JPEG specifies the following conversion:

Y = 256 × E'y
Cb = 256 × E'Cb + 128
Cr = 256 × E'Cr + 128

To quote: "where the E'y, E'Cb and E'Cb are defined as in CCIR 601. Since values of E'y have a range of 0 to 1.0 and those for E'Cb and E'Cr have a range of -0.5 to +0.5, Y, Cb, and Cr must be clamped to 255 when they are maximum value."

This bugs me: why not use 255 in the above equation, instead of losing the last quantization level?

5 References
